Flume Event

An event is the basic unit of data transferred in Flume. It has a byte array payload that must be delivered from the source to the destination, along with optional headers.

A Flume agent accepts data (events) from clients or other agents and routes it to the appropriate destination (sink or agent).
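To make the shape of an event concrete, here is a minimal Python sketch of a byte payload with optional string headers. It is only an illustration of the idea, not Flume's own Java API; the names `SketchEvent` and `make_event` and the sample header `host` are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass(frozen=True)
class SketchEvent:
    """Hypothetical stand-in for a Flume event: a byte payload plus optional headers."""
    body: bytes
    headers: Dict[str, str] = field(default_factory=dict)


def make_event(text: str, **headers: str) -> SketchEvent:
    # The payload is treated as an opaque byte array, so the text is encoded first.
    return SketchEvent(body=text.encode("utf-8"), headers=dict(headers))


# An event with one header, and an event with no headers at all.
event = make_event("GET /index.html 200", host="web-01")
plain = make_event("no headers here")
```

The headers are optional metadata that travel with the payload; the payload itself is never interpreted in this sketch.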

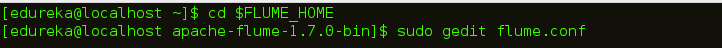

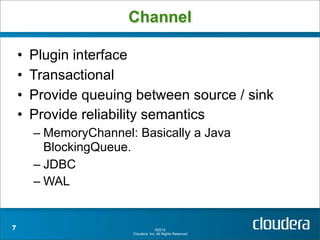

In Flume, an agent is a daemon process that runs independently.

Flume supports a variety of sources, including the Avro source, which listens on an Avro port and receives events from external Avro client streams; the Thrift source, which listens on a Thrift port and receives events from external Thrift client streams; the Spooling Directory source; and the Kafka source.

Flume Channel

A channel is a transient store that receives events from the source and buffers them until sinks consume them. It serves as a link between the sources and the sinks. Channels are fully transactional and can work with any number of sources and sinks.

Flume supports the file channel as well as the memory channel. The file channel is persistent, which means that once data is written to it, it will not be lost even if the agent restarts. The memory channel keeps events in memory, so it is not durable but is very fast.

Flume Sink

A Flume sink consumes events from the channel and stores them in HDFS or another destination store, such as HBase. A sink does not have to deliver events to a store; alternatively, we may set it up so that it delivers events to another agent. Flume works with various sinks, including the HDFS sink, Hive sink, Thrift sink, and Avro sink.
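As a rough illustration of how a channel buffers events between a source and a sink, the following Python sketch models an in-memory channel whose take operation is committed only if delivery succeeds. It is not Flume's implementation or API; the class `SketchMemoryChannel`, its method names, and the capacity value are assumptions made for this example.

```python
from collections import deque


class SketchMemoryChannel:
    """Hypothetical in-memory channel: buffers events until a sink commits a take."""

    def __init__(self, capacity: int = 100):
        self.capacity = capacity
        self._queue = deque()

    def put(self, event: bytes) -> None:
        # Source side: reject the event when the buffer is full.
        if len(self._queue) >= self.capacity:
            raise OverflowError("channel is full")
        self._queue.append(event)

    def take_batch(self, deliver, batch_size: int = 10) -> int:
        """Sink side: events leave the channel only if `deliver` succeeds."""
        count = min(batch_size, len(self._queue))
        batch = [self._queue.popleft() for _ in range(count)]
        try:
            deliver(batch)
        except Exception:
            # Rollback: put the batch back at the front, preserving order.
            self._queue.extendleft(reversed(batch))
            return 0
        # Commit: the events are gone from the channel.
        return len(batch)

    def __len__(self) -> int:
        return len(self._queue)


channel = SketchMemoryChannel(capacity=3)
for line in (b"a", b"b", b"c"):
    channel.put(line)


def failing_sink(batch):
    raise IOError("destination unavailable")


stored = []
failed = channel.take_batch(failing_sink)  # rollback: events stay in the channel
taken = channel.take_batch(stored.extend)  # commit: events move to the "store"
```

Because everything lives in a Python `deque`, this sketch behaves like the memory channel described above: fast, but the buffered events would be lost if the process stopped.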


Flume architecture consists of many elements. Let us have a look at them.

A Flume source can be found on data producers such as Facebook and Twitter. The source gathers data from the generator and sends it to the Flume channel in the form of Flume events.

When the incoming data rate exceeds the rate at which data can be written to the destination, Flume acts as a middleman between the data producers and the centralised storage, ensuring a steady flow of data between them. Flume transactions are channel-based, with each message requiring two transactions (one for the sender and one for the receiver), which ensures that messages are delivered reliably. Flume also has a feature called contextual routing.
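Contextual routing, mentioned above, means choosing where an event goes based on its content or metadata. The Python sketch below shows one simple way to think about it: look up a header value in a mapping and fall back to a default. It is a simplified illustration, not Flume's code; the function `route_by_header`, the header name `severity`, and the channel names are all hypothetical.

```python
from typing import Dict, List


def route_by_header(headers: Dict[str, str], header_name: str,
                    mapping: Dict[str, List[str]], default: List[str]) -> List[str]:
    """Return the (hypothetical) channel names an event should be sent to."""
    value = headers.get(header_name)
    # Events with a missing or unmapped header value go to the default channels.
    return mapping.get(value, default)


mapping = {"error": ["alerts_channel"], "info": ["archive_channel"]}
default = ["archive_channel"]

error_route = route_by_header({"severity": "error"}, "severity", mapping, default)
unknown_route = route_by_header({"host": "web-01"}, "severity", mapping, default)
```

Here an event tagged `severity=error` is routed to the alerts channel, while an event without that header falls through to the default.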

We may store the data in any centralised storage (HBase, HDFS) using Apache Flume.

Let us have a look at the benefits of using Flume:

- We can quickly pull data from many servers into Hadoop using Flume.
- Flume can handle multi-hop flows, fan-in and fan-out flows, contextual routing, and more.
- Flume can handle many data sources and destinations.
- Flume is also used to ingest massive amounts of event data produced by social networking sites like Facebook and Twitter and e-commerce sites like Amazon and Flipkart, as well as log files.
- Flume efficiently ingests log data from many online sources and web servers into a centralised storage system (HDFS, HBase).

Features of Flume

Apache Flume has many features. Let us have a look at some of the notable ones. Apache Flume has several tunable reliability, recovery, and failover mechanisms that come to our aid when we need them.

This article was published as a part of the Data Science Blogathon.

Introduction

Apache Flume is a platform for collecting, aggregating, and transporting massive volumes of log data quickly and effectively. It is written in Java, and its design is built on streaming data flows, which makes it simple and easy to use. It has its own query processing engine, which allows it to transform each new batch of data before sending it to its designated sink.
